A third diving infraction results in a $2,000 US fine; a fourth warrants a one-game suspension. "One of the impediments to the enforcement of hooking and holding and interference was the diving," pointed out Colin Campbell, the NHL's disciplinarian. "Or the embellishment of those calls to draw a penalty. We knew this would happen because players are competitive and they do what they have to do to win the game," Campbell explained. "So this is how the players and the managers have asked us to handle it."

Avery was recently fined 0.09% of his annual income, and I guess that means it’s time to write about diving again. A number of people liked the diving article I wrote a while back, which argued that unless the NHL makes drastic changes to its rules, it will be unable to control diving, because players still gain more than they lose by diving. I wanted to write a continuation, this time with a little data. First I wanted the NHL’s own opinion of how it is doing on the diving front. Articles about diving turned out to be hard to find, since every hockey writer seems to like saying that goalies make a “diving save” (an entirely different issue), but I did find a short blurb at USA Today that makes two simple arguments about the positive effects of diving enforcement this season.

At this point last season, Walkom says, about 20 diving penalties had been called. "We are probably closer to 30 this season."

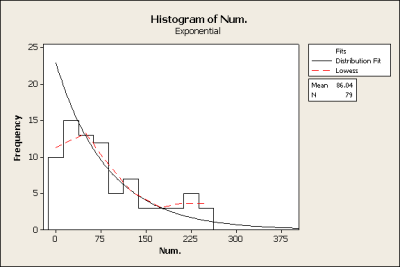

Walkom is correct that diving penalties are up about 50% this year, but as I often say, 50% of nothing is, well, nothing. Last season there were 109 diving calls in total. At this point I count 25 in 2005-2006 and 35 this year, meaning, of course, that since the article was written the two seasons have been running a lot closer to even (roughly five more calls in each). So whether the NHL maintains its level of diving calls is questionable, since it wants to make a statement at the beginning of the season so it can have nice quotes in articles.
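As a quick check, here is the percentage change computed from both sets of counts quoted above, Walkom's rounded figures and my own tallies. This is only a sketch; the helper name `pct_increase` is made up for illustration.

```python
# Diving calls at the same point of each season, as quoted in the post.
walkom_last, walkom_this = 20, 30   # Walkom's rough figures (2005-06, 2006-07)
author_last, author_this = 25, 35   # my own tallies at a later date

def pct_increase(before, after):
    """Percentage change from `before` to `after` (hypothetical helper)."""
    return 100.0 * (after - before) / before

walkom_change = pct_increase(walkom_last, walkom_this)
author_change = pct_increase(author_last, author_this)
print(f"Walkom: +{walkom_change:.0f}%, my tally: +{author_change:.0f}%")
```

With the same five extra calls added to each season, the increase shrinks from 50% to 40%, which is the "closer to even" effect described above.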

The other difference, according to Walkom, is about half of the diving calls this season were called without being connected to another penalty. Last season, diving calls were primarily called in conjunction with a foul. For example, one team's player would be called for hooking, and the "hooked" player would be called for embellishing the fall to draw a referee's attention.

In 2005-2006 there were 109 diving calls, 89 of which were paired with a penalty on the other team (82%). This year, 22 of the 36 diving calls were paired with another penalty, which works out to 61%, but that share is increasing; that is to say, since that article was written there have been slightly fewer dives called without a hooking call. I am always glad to see more diving calls, although in my opinion, as long as the hook is still called, the diving call has no value (there’s no cost to the diver). This means that in 266 hockey games there were 14 diving calls without a penalty on the opposing team. Those 14 penalties become about 2.5 goals against (a power-play success rate of roughly 18%). Over the course of the season this is about 65 power plays and about 12 goals against; distributed amongst 30 teams, that costs each team about 0.07 wins (~$70,000[1]), which I’d say most players would call just the cost of doing business.
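The cost side of that arithmetic can be sketched in a few lines. The assumptions are mine, not NHL data: a 1230-game season (30 teams × 82 games / 2), a conversion rate taken from "14 penalties become about 2.5 goals", and footnote [1]'s 5.5 goals per win and $1 million per win. The constant names are just for illustration.

```python
# Cost of diving calls that put only the diver in the box (a rough sketch).
GAMES_SO_FAR = 266
GAMES_PER_SEASON = 30 * 82 // 2   # 1230 regular-season games
TEAMS = 30
GOALS_PER_PP = 2.5 / 14           # assumed: ~0.18 goals per power play
GOALS_PER_WIN = 5.5               # footnote [1]
DOLLARS_PER_WIN = 1_000_000       # footnote [1]

# Share of diving calls paired with a penalty on the other team.
share_paired_last = 89 / 109      # 2005-2006
share_paired_this = 22 / 36       # 2006-2007 so far

standalone = 36 - 22              # diving calls with no penalty on the opponent

# Scale the first 266 games up to a full season, then convert to goals and wins.
season_pp_against = standalone * GAMES_PER_SEASON / GAMES_SO_FAR
season_goals_against = season_pp_against * GOALS_PER_PP
wins_per_team = season_goals_against / TEAMS / GOALS_PER_WIN

print(f"paired share: {share_paired_last:.0%} -> {share_paired_this:.0%}")
print(f"{season_pp_against:.0f} power plays, {season_goals_against:.1f} goals against, "
      f"{wins_per_team:.2f} wins (${wins_per_team * DOLLARS_PER_WIN:,.0f}) per team")
```

This lands on roughly 65 power plays, 11.6 goals against and 0.07 wins (about $70,000) per team, matching the rounded figures in the text.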

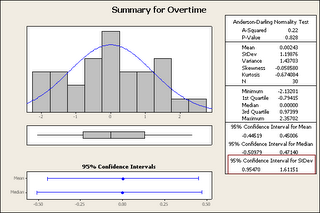

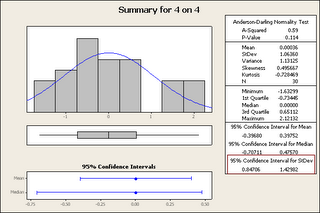

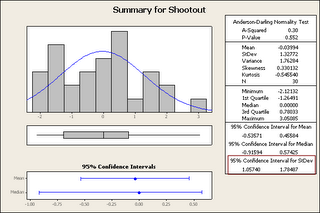

There were 1251 “subjective” calls this season, including hooking, tripping, interference and holding the stick, all of which I consider “dive-able”. I’m sure people could think of more, or debate my choice of penalties, but that won’t affect this analysis significantly. Of these 1251 penalties, 48 were called at the same time as a penalty on the other team, so 1203 should have resulted in a power play, which is 86 times as many as the 14 stand-alone diving calls. Just looking at that 86:1 ratio, one can figure out that you should probably still dive. There is no good way to determine the number of players who actually dive, but in 2005-2006 there were 84 players penalized for diving, and I have 762 players listed in my database with more than 20 games played, which works out to the NHL declaring that 11% of players dive. Why would these players not dive on almost every call? I’ll leave that for the readers to figure out. So let’s say that 11% of the above calls, or 138, were associated with diving. We know there have been 36 diving calls, so 26% of dives resulted in diving calls (which is pretty high), and about 40% of those resulted in only the diving call, which works out to about 10% of dives resulting in just a diving call. So here’s the choice when deciding to dive: 74% power play, 16% 4 on 4, 10% just a diving call. It should be obvious that the choice would be to dive, unless that $1,000 fine is a big deterrent to someone who makes more than $500,000.
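The "should I dive?" odds follow directly from the counts above. The one loud assumption, as in the text, is that the 11% of players penalized for diving in 2005-2006 stands in for the share of dive-able calls that actually involved a dive; the variable names are illustrative.

```python
# Outcome odds for a dive, using this season's counts from the post.
subjective_calls = 1251   # hooking, tripping, interference, holding the stick
offsetting = 48           # called together with a penalty on the other team
diving_calls = 36
standalone_dives = 14     # diving calls with no penalty on the opponent

power_plays = subjective_calls - offsetting          # 1203
pp_per_standalone = power_plays / standalone_dives   # roughly 86 to 1

# ASSUMPTION: 84 of 762 players (~11%) stands in for the share of calls with a dive.
diver_share = 84 / 762
est_dives = round(subjective_calls * diver_share)    # about 138 dives

p_caught = diving_calls / est_dives                  # dive draws a diving call
p_just_dive = standalone_dives / est_dives           # only the diver goes off
p_four_on_four = p_caught - p_just_dive              # both players go off
p_power_play = 1 - p_caught                          # diver's team gets the power play

for label, p in [("power play", p_power_play),
                 ("4 on 4", p_four_on_four),
                 ("just a diving call", p_just_dive)]:
    print(f"{label}: {p:.0%}")
```

Computing the stand-alone share directly as 14/138 is what gives about 10% rather than multiplying the two rounded percentages.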

Of course, 138 dives in the first 266 games projects to about 638 over the season, with 472 power plays for the diver’s team, or about 80 goals. Distributed amongst 30 teams that is 2.6 goals per team, which works out to 0.5 of a win, or about $500,000. That of course assumes the NHL has a fixed number of divers; if you start to assume that every player dives 50% of the time, for example, these numbers will really start to get big. Either way, $500,000[1] is greater than $70,000[1]: 2.6 goals for completely dominates the cost of 0.4 goals against. Of course, it’s hard to say what percentage of the time a power play would have been called without the dive.
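Here is a sketch putting the full-season benefit and cost side by side, under the same assumptions as before (1230 games, about 2.5 goals per 14 power plays, and footnote [1]'s 5.5 goals and $1 million per win). With that conversion rate the total comes out nearer 84 goals (about 2.8 per team) than the rounded 80, but the conclusion, roughly half a win, is unchanged.

```python
# Full-season benefit vs. cost of diving (a rough sketch, same assumptions as above).
GAMES_SO_FAR, GAMES_PER_SEASON, TEAMS = 266, 1230, 30
GOALS_PER_PP = 2.5 / 14          # assumed conversion rate
GOALS_PER_WIN = 5.5              # footnote [1]
DOLLARS_PER_WIN = 1_000_000      # footnote [1]

est_dives = 138                  # estimated dives in the first 266 games
p_power_play = 1 - 36 / 138      # dive not called: diver's team goes on the PP
p_just_dive = 14 / 138           # only the diver goes to the box

season_dives = est_dives * GAMES_PER_SEASON / GAMES_SO_FAR   # about 638
pps_for = season_dives * p_power_play                        # about 472
pps_against = season_dives * p_just_dive                     # about 65

goals_for_per_team = pps_for * GOALS_PER_PP / TEAMS
goals_against_per_team = pps_against * GOALS_PER_PP / TEAMS
wins_for = goals_for_per_team / GOALS_PER_WIN
wins_against = goals_against_per_team / GOALS_PER_WIN

print(f"{season_dives:.0f} dives, {pps_for:.0f} power plays for, "
      f"{goals_for_per_team:.1f} goals for vs {goals_against_per_team:.1f} against per team")
print(f"benefit ${wins_for * DOLLARS_PER_WIN:,.0f} vs cost ${wins_against * DOLLARS_PER_WIN:,.0f}")
```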

This wouldn’t be as interesting if I just talked about the big picture; each diving call has a diver associated with it and a referee who calls it. For example, last season only 4 players got more than 2 diving calls against them. Ilya Kovalchuk had 4; he is a dominant player who can skate circles around defenders, so it should be no surprise that a guy like Kovalchuk is on the right end of a lot of calls. Whether he’s actually diving, or just getting diving calls because he’s always being hooked and referees call dives somewhat randomly, would be hard to determine. There are three tied for 2nd with 3 each: Gaborik, Zubrus and Afinogenov. Why this list is made up of four “Russians” (born in Czechoslovakia or the USSR) is a good question: are Russians bad actors, do Russians have less integrity, or do NHL referees have a bias against Russians (the Don Cherry theory)? For my part I won’t conclude any of the three, only that it’s interesting that the top 4 are all Eastern European skaters. The important thing, however, is that all these skaters are strong and fast and likely draw a lot of their teams’ penalties; I bet the three diving calls didn’t hurt any of their teams. Of course, if Kovalchuk gets 4 again this season he’ll receive a one-game suspension. If you estimate Kovalchuk’s value over the season at about 5 wins (based on player contribution), the suspension costs about 0.06 wins, which roughly doubles the cost to the team but still doesn’t exceed the benefits. Kovalchuk himself, of course, will lose a lot of money with a one-game suspension.
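The suspension arithmetic is a one-liner, sketched here alongside the earlier per-team estimates. The assumption is the text's own rough valuation of about 5 wins per 82-game season, spread evenly across games; the 0.07-win cost and 0.5-win benefit are the rounded per-team figures estimated earlier in this post.

```python
# Extra team cost of a one-game suspension for a star diver (rough sketch).
GAMES_PER_TEAM = 82
player_wins = 5.0          # ASSUMPTION: the post's ~5-win valuation of Kovalchuk
games_suspended = 1

suspension_cost = player_wins / GAMES_PER_TEAM * games_suspended   # about 0.06 wins
standalone_cost = 0.07     # per-team cost of stand-alone diving calls (earlier estimate)
benefit = 0.5              # per-team benefit of diving (earlier estimate)

total_cost = standalone_cost + suspension_cost
print(f"suspension: {suspension_cost:.2f} wins, "
      f"total cost {total_cost:.2f} vs benefit {benefit:.2f} wins")
```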

It’s interesting, but you need referees to make these calls, and spotting a dive isn’t the same as spotting a hook because it’s subjective: one referee’s dive is another’s fall. A few referees call more than 0.2 dives per game: in 2006-2007, Brad Watson, Dennis LaRue, Dean Morton, Michael McGeough, Brad Meier and Dan O'Rourke; in 2005-2006, Stephane Auger, Dan O'Rourke and Bob Langdon. Most referees, however, call hardly any diving penalties (fewer than 0.1 per game).

Personally I believe every player dives to a certain extent; it’s when a player goes over a certain NHL-determined line that the diving penalty gets called. I’m sure everyone has seen replays of a stick getting very close to someone’s face and their head whipping backwards even though it doesn’t touch; this could be a natural reaction to something shoved that close to your face, or it could be embellishment. You could do studies on the “correct” reactions to things like sticks shoved very close to someone’s face in order to define what embellishment is. The same goes for a hook: once the player feels the hook, he just stops exerting any balancing force and falls naturally, and that’s an undetectable dive. I’m sure everyone has seen this one too: a player holds another player’s stick under his arm, and when the other player pulls to get his stick loose, the first player falls. Players have found many ways to get power plays, and most of them aren’t very honest, but the NHL has chosen to encourage them by calling the penalties and attaching little if any consequence. I wish the NHL good luck, but its enforcement of diving is going nowhere.

[1] - I approximate the value of a win based on the salary cap of approximately $41 million. That is to say, the average team wins 41 games and spends about $41 million, so one win is worth around $1 million. It also takes about 5.5 marginal goals to get a win.